In recent months, OpenAI, the renowned AI research lab behind ChatGPT, has experienced a significant exodus of employees, particularly those committed to AI safety. This article delves into the reasons behind the departures of key figures like Ilya Sutskever and Jan Leike, the implications for OpenAI’s future, and the broader concerns about the company’s trajectory under CEO Sam Altman.

The Departure of Key Figures

Who Left and Why?

Since November, OpenAI has seen at least seven prominent safety-conscious employees leave the company. Among them were Ilya Sutskever and Jan Leike, leaders of the superalignment team. Their resignations raised eyebrows and sparked speculation about deeper issues within the company.

The Failed Coup

The turmoil began last November when Sutskever and the OpenAI board attempted to oust CEO Sam Altman, citing concerns about his transparency and trustworthiness. This coup failed dramatically as Altman, supported by President Greg Brockman, threatened to dismantle the company by taking top talent to Microsoft. This power struggle left a lasting impact on the company’s internal dynamics.

The Collapse of Trust

Mistrust in Leadership

Insiders reveal that the root of the problem lies in a growing mistrust in Altman’s leadership. Employees dedicated to AI safety felt increasingly sidelined as the company prioritized rapid commercialization over cautious development. Altman’s actions, including seeking funding from controversial regimes like Saudi Arabia, further eroded trust.

The Impact of Non-Disparagement Agreements

OpenAI’s practice of requiring departing employees to sign non-disparagement agreements compounded the issue. This practice effectively silences critics, making it difficult for the public to understand the true extent of the internal discord.

Voices of Dissent

Daniel Kokotajlo Speaks Out

One notable exception to the silence is Daniel Kokotajlo, who left OpenAI without signing the offboarding agreement. He openly criticized the company's direction, highlighting the potential risks of training powerful AI systems without adequate safety measures.

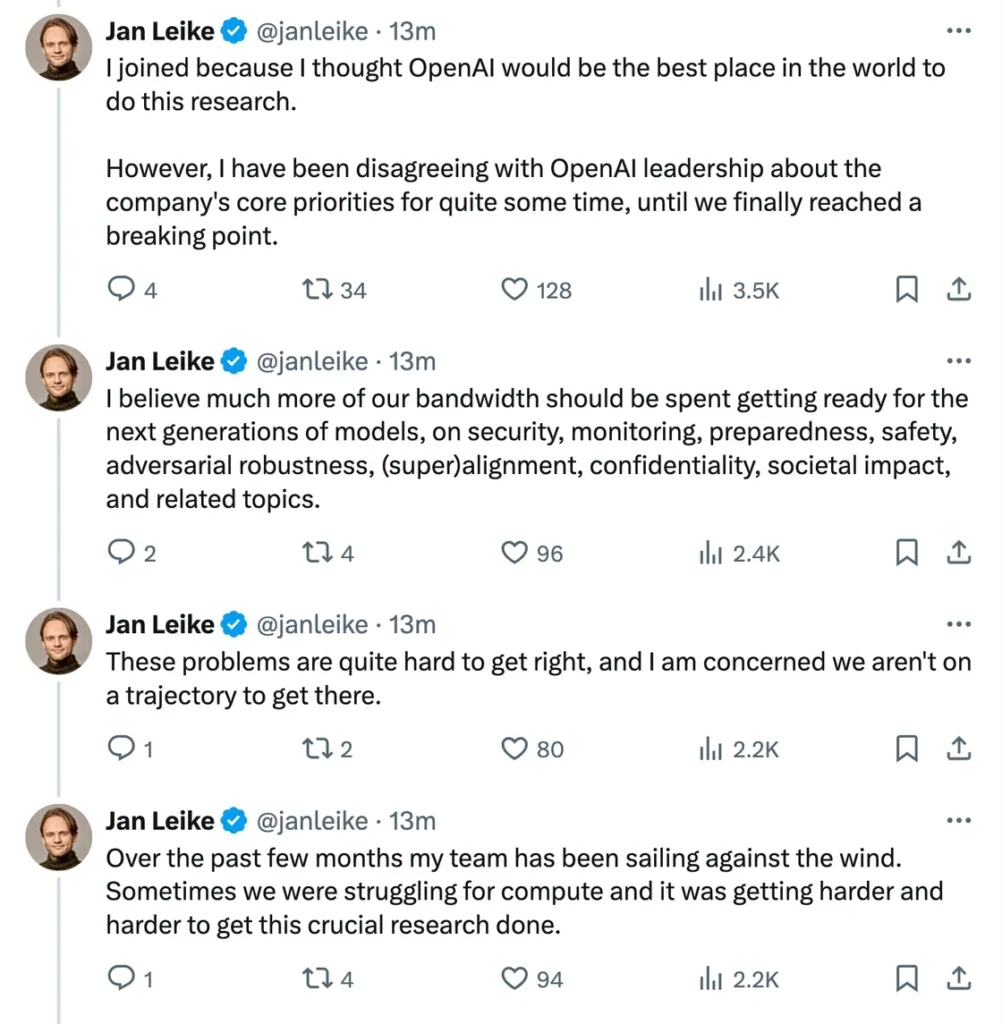

Jan Leike’s Resignation

Jan Leike, co-leader of the superalignment team, publicly announced his resignation, expressing deep disagreements with OpenAI's priorities. His blunt departure underscored the growing discontent among those tasked with ensuring AI safety.

The Broader Implications

Eroding Safety Measures

With the departure of key safety personnel, there are concerns about the effectiveness of OpenAI’s safety protocols. The superalignment team, now led by co-founder John Schulman, is significantly weakened and struggling to maintain focus on long-term safety goals.

Commercialization vs. Safety

OpenAI’s aggressive push towards commercialization, including the development of new AI chips, raises questions about its commitment to safety. Critics argue that this focus on rapid growth could lead to the deployment of AI systems that are not thoroughly vetted for safety and ethical implications.

What Lies Ahead?

The Future of AI Safety at OpenAI

The erosion of the safety team at OpenAI poses a significant risk. Without a dedicated and robust team, the company's ability to address the complex safety challenges posed by advanced AI technologies is compromised.

Community Concerns

Members of the AI safety community and the effective altruism movement, which has been heavily involved in AI safety work, have expressed growing concern. The departure of prominent figures like William Saunders, who resigned under cryptic circumstances, further highlights the instability within OpenAI's safety efforts.

Conclusion

OpenAI's recent internal strife and the departure of key safety-focused employees paint a troubling picture. Trust in leadership, crucial for fostering a culture of safety and responsibility, has been significantly undermined. As OpenAI continues its ambitious quest to develop advanced AI systems, the world watches closely, hoping that safety and ethical considerations will not be sacrificed on the altar of rapid technological advancement.

FAQs

1. Why did key safety personnel leave OpenAI?

Key safety personnel left OpenAI due to a loss of trust in leadership, particularly in CEO Sam Altman’s commitment to prioritizing AI safety over rapid commercialization.

2. What was the attempted coup at OpenAI?

The attempted coup involved Ilya Sutskever and the OpenAI board trying to oust CEO Sam Altman, citing concerns about his transparency. The coup failed, leading to significant internal strife.

3. How does OpenAI handle departing employees?

OpenAI often requires departing employees to sign non-disparagement agreements, preventing them from publicly criticizing the company. Those who refuse to sign risk losing their equity in the company.

4. What are the implications of the safety team’s departure?

The departure of key safety personnel weakens OpenAI’s ability to address long-term safety challenges and undermines trust in the company’s commitment to responsible AI development.

5. What is the future of AI safety at OpenAI?

With a weakened safety team and a strong focus on commercialization, there are concerns that OpenAI may struggle to adequately address the safety and ethical implications of advanced AI technologies.

1 thought on ““I Lost Trust”: Why the OpenAI Team in Charge of Safeguarding Humanity Imploded”